Wheatstone for Radio

Wheatstone is ubiquitous in the radio industry. Since our early days, we've been making consoles that take radio further. Our networking set the standard for the industry, leading the way to modern AoIP networks. Our open architecture lets us partner with other industry folks to provide solutions that open doors for functionality. Our virtual tools provide solutions that are customizable to address just about any application you can think of. And our processing sets new standards for audio delivery.

We are constantly at it, developing tools that let radio broadcasters be in many locations at once while keeping control specific to their workflows from wherever they happen to be. In short, we are your Broadcast Perfectionists.

Wheatstone Digital Networkable Radio Consoles

Build/control/extend your network virtually. Deploy multiple software mix engines and multitouch glass controllers, processors, and streaming processors from one or multiple servers to streamline your workflow. Layers is the Wheatstone Bridge between the virtual and physical worlds and can make the transition (including hybrid studios) a reality today.

Sideboard and Talent Stations

With our Talent Stations and Sideboards, Wheatstone has created network appliances that fit anyplace you need them. Dial up sources or destinations on your network for news reporters, interviewees, guests, etc. Their low-profile, minimal design makes them unobtrusive and easy to use.

Audioarts Digital Networkable Radio Consoles

Audioarts Standalone Radio Consoles

WheatNet-IP Networking

WheatNet-IP is a network system that uses Internet Protocol to distribute audio intelligently to devices across scalable networks. It makes all audio sources available to all devices (such as mixing consoles, control surfaces, software controllers, automation devices, etc.) and controllable from any and all devices. WheatNet-IP is AES67 compatible, yet is unique in that it represents an entire end-to-end solution, complete with audio transport, full control, and a toolset to enable exceptionally intelligent deployment and operation.

StageBox One

The 4RU StageBox One extends console I/O, providing 32 mic/line inputs, 16 analog line outputs, 8 AES3 inputs, and 8 AES3 outputs, as well as 12 logic ports and two Ethernet ports. Its heavy-duty construction makes it well suited to on-the-go applications, such as remote sporting events. StageBox One works with all WheatNet-IP audio networked consoles.

BLADES (including Blade 4 preview)

BLADES are the interfaces used to build a WheatNet-IP network. Each BLADE has enough functionality to be a complete radio studio. I/O, Processing, Routing, exceptional control over all aspects of your network, AES67 compatibility for working with other networks – a world of power in each BLADE.

VoxPro Record/Edit/Playback in Real Time

Software

On-Air Broadcast and Mic/Voice Processors

Wheatstone is different. Radio stations that use our processors know that they can reach more listeners, due to our multipath mitigation controls. They know that they can build a more compelling sound - cleaner and louder - to keep that increased listenership from tuning out. In a market with so many processors to choose from, people who check out Wheatstone processing never go back to the status quo.

To learn more, simply click on an image to be connected to its product page on our website.

On-Air Processors

SG-192 Stereo Generator

The SG-192 is the first standalone FM stereo generator capable of passing full AES MPX composite baseband to the exciter, including RDS and SCA up to 67 kHz. It is equipped with an intelligent stereo multipath controller that helps mitigate the effects of multipath-induced receiver blending.

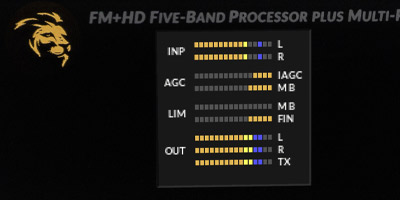

NEW: Audioarts LiON FM Processor

The Audioarts LiON Five-Band Processor/Multipath Controller has WheatNet-IP, so it can be networked. It has analog and AES3, so it can stand alone. It has Wheatstone SystemLink™ built in to send full 24-bit linear audio directly to your transmitter over reliable high-speed links: Baseband 192 MPX with FM+HD timing locked (no codec to degrade audio quality). And it comes with 50 presets so you can plug and play.

MPX SystemLink™

MPX SystemLink is an optional 1RU product for the X5 that maintains HD and FM alignment (LiveLock) from your studio to your transmitter site. It carefully keeps the HD and FM packets in sync so time alignment done with the processor at the studio is maintained straight through to the receiver.

Mic/Voice Processors

M4-IP USB – 4-Channel Networkable Mic/Voice Processor BLADE-3

The M4-IP USB combines four high-quality microphone preamps, four channels of microphone processing, four independent USB ports, and a WheatNet-IP BLADE interface, allowing you to place four microphone inputs anywhere in your WheatNet-IP Intelligent Network (although it also works just fine as a standalone processor).

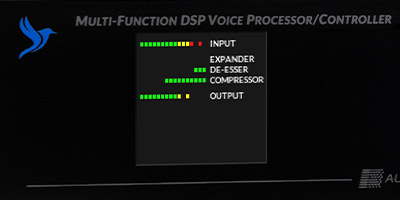

MG-1 Mic/Voice Processor

The MG-1 is a flexible digital microphone processor that offers all the control parameters of its M-1 predecessor, but with all audio control parameters set via an associated GUI rather than front panel controls. It offers unlimited presets, security, and networkability in an all-digital framework, with parameters that are easy to set up from the GUI, so you can give each voice talent his or her own personal sound at the press of a button.

NEW: Audioarts Voice 1 Processor

The Audioarts Voice 1 has all the tools and secret sauce of the Wheatstone M-1 microphone processor. But it's got more: WheatNet-IP, AES67, remote GUI control, password protection, a real-time clock, and presets, complete with scheduler. It can be controlled from the OLED display and, of course, your desktop computer.